Many of the most visible AI breakthroughs in games over the last decade rely on reinforcement learning. This method learns from experience rather than from hand-written rules. It has helped machines beat world champions in Go and control robots in factories.

Reinforcement learning helps an agent learn by acting in an environment and getting rewards or penalties. It’s like a child learning to ride a bike, falling and getting back up. This simple process is at the heart of AI learning today.

This field combines control theory, probability, and deep learning. It gives a precise framework for sequential decisions and the rewards that follow them. Key parts include agents, environments, states, actions, rewards, and policies. The textbook by Richard Sutton and Andrew Barto, along with research from DeepMind and OpenAI, has made it both practical and well-documented.

Key Takeaways

- Reinforcement learning is a trial-and-error approach where agents learn by interacting with an environment and receiving rewards.

- Core components include agent, environment, state, action, reward, and policy.

- Deep reinforcement learning uses neural networks to scale RL to complex, high-dimensional tasks.

- RL powers applications such as game playing, robotics, and autonomous systems.

- For a clear practical overview and examples, see this RL tutorial from a concise online guide: Reinforcement Learning Explained.

Introduction to Reinforcement Learning and Trial-and-Error Learning

Reinforcement learning is a way for agents to learn by interacting with their environment. They seek rewards for their actions. This method is based on trial and error, where experience leads to better choices over time.

Definition and core idea

At its heart, reinforcement learning involves an agent, environment, actions, and rewards. Agents take actions, see the results, and adjust their strategies to get more rewards. This process improves behavior over time without needing labels.

How RL differs from supervised and unsupervised learning

Supervised learning uses labeled examples, while reinforcement learning relies on feedback and rewards. Unsupervised learning finds patterns in data without rewards. Reinforcement learning, on the other hand, focuses on optimizing actions to achieve goals in an environment.

Why trial-and-error is a powerful learning paradigm

Trial-and-error is great for adapting in uncertain situations. Agents explore actions and learn from long-term results. This approach is perfect for robotics, games, and control tasks where making the right choice matters.

Key components of reinforcement learning

The core loop of reinforcement learning is simple. An agent acts, observes feedback, and updates its behavior. This loop links choices to outcomes and drives learning. Clear definitions of each component help engineers design systems that learn well.

Agent and environment

The agent makes decisions and the environment responds. On each pass through the loop, the agent receives feedback and adjusts how it acts. OpenAI Gym exposes this loop through a small reset-and-step interface, which makes it easy to test agents.
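The loop can be sketched in a few lines of plain Python. This is a minimal illustration, not the Gym API: `CoinFlipEnv`, its parameters, and `run_episode` are hypothetical names invented here, loosely modeled on a reset-and-step interface.

```python
import random


class CoinFlipEnv:
    """Hypothetical toy environment: guess the outcome of a biased coin."""

    def __init__(self, p_heads=0.8, episode_length=10, seed=0):
        self.p_heads = p_heads
        self.episode_length = episode_length
        self.rng = random.Random(seed)
        self.t = 0

    def reset(self):
        self.t = 0
        return 0  # a single dummy observation

    def step(self, action):
        # action: 1 = guess heads, 0 = guess tails
        outcome = 1 if self.rng.random() < self.p_heads else 0
        reward = 1.0 if action == outcome else 0.0
        self.t += 1
        done = self.t >= self.episode_length
        return 0, reward, done


def run_episode(env, policy):
    """Run one episode and return the total (undiscounted) reward."""
    obs = env.reset()
    total, done = 0.0, False
    while not done:
        action = policy(obs)                  # agent decides
        obs, reward, done = env.step(action)  # environment responds
        total += reward
    return total


def always_heads(obs):
    return 1


episode_reward = run_episode(CoinFlipEnv(), always_heads)
```

A learning agent would replace `always_heads` with a policy that changes as rewards come in.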

States, observations, and action spaces

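A state is a complete description of the environment, while an observation is what the agent actually sees. An observation can be full or partial. The action space defines the valid moves. Games like Atari use discrete action spaces, while robotics tasks usually use continuous ones.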

Rewards and the reward function

Rewards guide learning. The reward function turns states, actions, and next states into numbers. Designers shape it to encourage good behavior without shortcuts.

Return and evaluation

Return sums rewards over time. It measures long-term success. Algorithms aim for expected return, not just immediate rewards.

Policies and value functions

A policy maps observations to actions. Policies can be deterministic or stochastic. A value function predicts the expected future return from a state or a state-action pair. Learning a good value function helps evaluate and improve policies.

| Component | Role | Common examples |
|---|---|---|
| Agent | Chooses actions based on a policy | Robotic controller, game bot |
| Environment | Emits observations and rewards in response to actions | Simulators like MuJoCo, Atari emulators |
| State observation | Representation of the current situation for the agent | Camera images, sensor readings, game frames |
| Action spaces | Defines allowable actions and their format | Discrete moves in Go, continuous torques in robotics |
| Reward function | Specifies feedback to guide learning | Score increments, energy penalties, success flags |
| Return | Aggregates future rewards to evaluate sequences | Discounted sum with gamma, episodic sum |
| Policy | Maps observations to actions | Neural network, tabular lookup, stochastic sampler |
| Value function | Estimates expected return for decisions | State-value V(s), action-value Q(s,a) |

How reinforcement learning works in practice

Reinforcement learning starts with an agent sensing its environment. It then chooses an action and gets a reward. This cycle repeats millions of times until the agent learns well.

Real-world RL uses logs to track progress and adjust settings.

Observation-action-reward-update cycle

An agent observes a state and picks an action. The environment gives a reward and a new observation. The agent updates its knowledge based on this.

Agents trained with libraries such as Stable Baselines on OpenAI Gym environments follow this same cycle.

Exploration versus exploitation trade-off

It’s key to balance exploration and exploitation. Too much exploitation might keep the agent in bad routines. Too much exploration wastes time.

Methods like epsilon-greedy or Thompson sampling help. They encourage trying new actions while still favoring actions already known to pay off.
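As an illustration, here is a minimal epsilon-greedy sketch on a multi-armed bandit. The bandit setup, the Gaussian reward noise, and the function names are assumptions made for this example.

```python
import random


def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon pick a random arm, otherwise the best-known arm."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))                    # explore
    return max(range(len(q_values)), key=lambda a: q_values[a])  # exploit


def run_bandit(true_means, epsilon=0.1, steps=5000, seed=0):
    rng = random.Random(seed)
    q = [0.0] * len(true_means)  # estimated value per arm
    n = [0] * len(true_means)    # pull count per arm
    for _ in range(steps):
        a = epsilon_greedy(q, epsilon, rng)
        reward = rng.gauss(true_means[a], 1.0)  # noisy reward (assumed Gaussian)
        n[a] += 1
        q[a] += (reward - q[a]) / n[a]          # incremental sample mean
    return q, n
```

With `epsilon=0` the agent only exploits its current estimates. Larger values spend more pulls on arms that currently look worse.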

Episode, trajectory, and return formulations

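An episode is a sequence of interaction that starts in an initial state and ends in a terminal state or after a fixed number of steps. The recorded states, actions, and rewards form a trajectory, and algorithms learn from these trajectories. Finite-horizon setups can sum rewards without discounting, which suits games with a clear end.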

For ongoing tasks, discounted returns are used. This gives more weight to immediate rewards. It helps in infinite-horizon problems.

Choosing between undiscounted and discounted returns affects learning. It impacts the goal and stability of the agent.
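A short sketch makes the two formulations concrete. The function names are illustrative. Setting `gamma=1.0` gives the undiscounted sum, and values below 1 give the discounted return.

```python
def discounted_return(rewards, gamma=1.0):
    """Return r_0 + gamma * r_1 + gamma^2 * r_2 + ... for one trajectory."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def rewards_to_go(rewards, gamma=1.0):
    """Discounted return measured from every time step of the trajectory."""
    out, g = [], 0.0
    for r in reversed(rewards):
        g = r + gamma * g
        out.append(g)
    return out[::-1]
```

For the reward sequence `[1, 1, 1]`, the undiscounted return is 3, while `gamma=0.5` gives 1 + 0.5 + 0.25 = 1.75.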

Practical RL combines these aspects. Engineers pick the length of trajectories, choose exploration methods, and set discount factors. Tracking rewards helps see how well the agent is learning.

Mathematical foundations and formalisms used in RL

Reinforcement learning (RL) is built on a few key mathematical ideas. These ideas make it easier to design and test algorithms. They help connect our understanding of how agents learn to the actual math behind it.

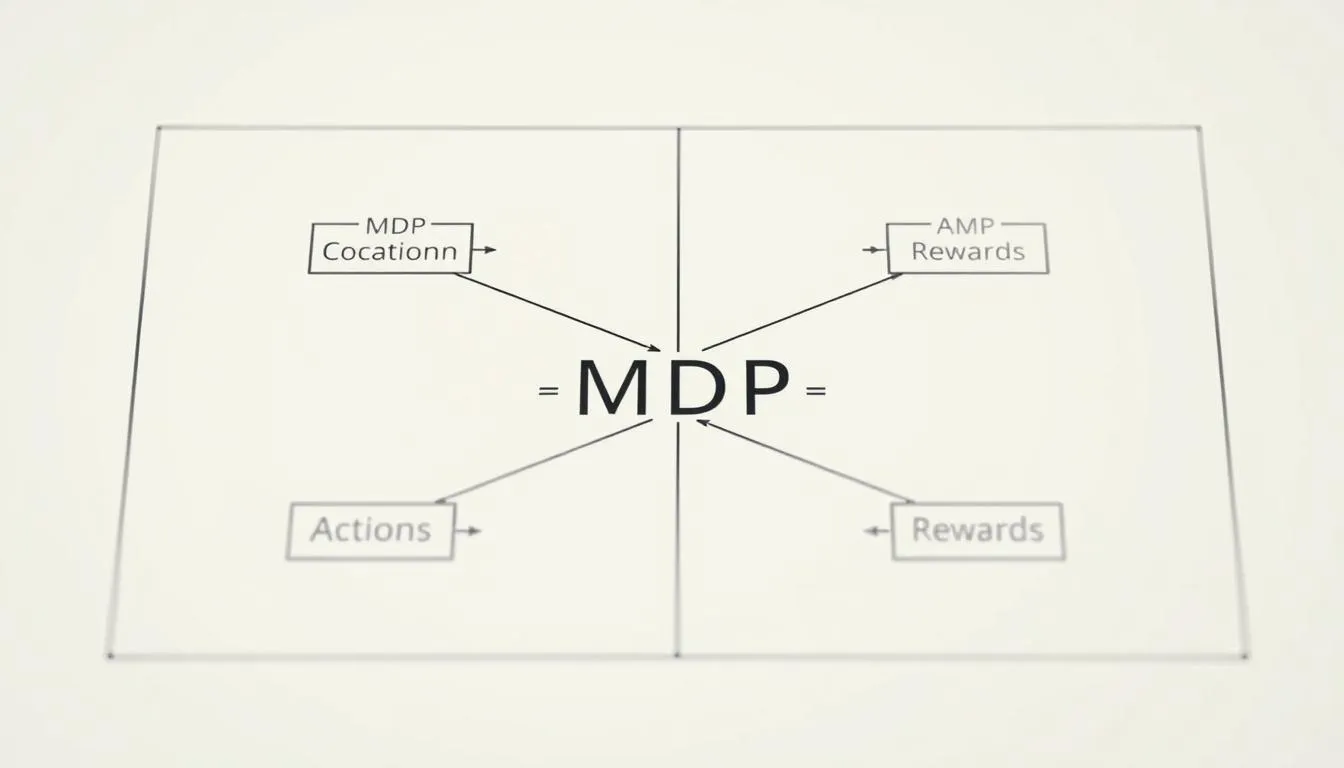

Markov Decision Processes

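An MDP is a formal way to describe a decision problem. It includes a set of states, a set of actions, a reward function, transition probabilities that say how the state changes, and a starting-state distribution. This setup makes it easier to analyze the problem and improve the agent’s behavior.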

The Markov property is key. It says the next state depends only on the current state and action. This makes planning and proving things easier.

Bellman equations

Value functions satisfy a recursive relation called the Bellman equation. It says the value of a state is the expected immediate reward plus the discounted value of the next state. Algorithms use this relation to update value estimates step by step.
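In symbols, one common way to write this is shown here. The notation is an assumption, since the text does not fix one: policy π, transition probabilities P, reward r(s, a, s'), and discount factor γ. The first line is the Bellman equation for a fixed policy and the second is the optimality form.

```latex
V^{\pi}(s) = \mathbb{E}_{a \sim \pi(\cdot \mid s),\; s' \sim P(\cdot \mid s, a)}
             \left[ r(s, a, s') + \gamma \, V^{\pi}(s') \right]

V^{*}(s) = \max_{a} \; \mathbb{E}_{s' \sim P(\cdot \mid s, a)}
           \left[ r(s, a, s') + \gamma \, V^{*}(s') \right]
```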

Expected return and RL optimization

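Agents aim to collect the highest cumulative reward. Because policies and environments can be random, the quantity actually optimized is the expected return: the average return over trajectories. The goal is to find the policy that maximizes it.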
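Written out, with the symbols again chosen here for illustration (τ is a trajectory, r_t the reward at step t, γ the discount factor), the objective looks like this:

```latex
R(\tau) = \sum_{t=0}^{\infty} \gamma^{t} r_t, \qquad
J(\pi) = \mathbb{E}_{\tau \sim \pi} \left[ R(\tau) \right], \qquad
\pi^{*} = \arg\max_{\pi} J(\pi)
```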

- MDP provides the modeling language for states, actions, and transitions.

- Markov property justifies one-step updates and tractable backups.

- Bellman backup is the computational step used in dynamic programming.

- Expected return defines the target optimized during RL optimization.
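The Bellman backup named in the list above can be sketched as value iteration on a tiny MDP. The three-state MDP, its probabilities, and its rewards are made up for this example.

```python
# Hypothetical 3-state MDP: P[s][a] is a list of (probability, next_state, reward).
P = {
    0: {"stay": [(1.0, 0, 0.0)], "go": [(0.9, 1, 0.0), (0.1, 0, 0.0)]},
    1: {"stay": [(1.0, 1, 0.0)], "go": [(0.9, 2, 1.0), (0.1, 1, 0.0)]},
    2: {"stay": [(1.0, 2, 0.0)], "go": [(1.0, 2, 0.0)]},  # absorbing goal state
}


def bellman_backup(V, s, gamma):
    """One Bellman optimality backup: best expected one-step lookahead from s."""
    return max(
        sum(p * (r + gamma * V[s2]) for p, s2, r in outcomes)
        for outcomes in P[s].values()
    )


def value_iteration(gamma=0.9, tol=1e-8):
    """Repeat backups over all states until the values stop changing."""
    V = {s: 0.0 for s in P}
    while True:
        new_V = {s: bellman_backup(V, s, gamma) for s in P}
        if max(abs(new_V[s] - V[s]) for s in P) < tol:
            return new_V
        V = new_V


values = value_iteration()
```

Because the Markov property holds, each backup needs only the current state's one-step outcomes, not the full history.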

These ideas connect theory to real-world applications. When you read Sutton and Barto or DeepMind papers, you’ll see these terms. They are the foundation of RL optimization.

Deep reinforcement learning and neural policy parameterization

Deep reinforcement learning combines powerful tools with trial-and-error learning. It handles complex inputs like images and sensor data. This method lets agents learn to act based on what they see, leading to big wins in games and more.

Why combine deep nets with reinforcement learning?

Deep models pull out important details from raw data. This lets policy networks focus on making decisions. Labs at Boston Dynamics and OpenAI use this to teach robots complex skills from just images and body signals.

Deterministic policy parameterizations

Deterministic controllers pick one action for each state. A common setup is a PyTorch MLP with two hidden layers of 64 units. This method is easy to use and works well when you don’t want to deal with action variance.

Stochastic policy parameterizations

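Stochastic policies let agents explore and represent uncertainty. For discrete actions, a categorical policy applies a softmax to per-action scores (logits) and samples an action from the resulting probabilities. For continuous actions, a diagonal Gaussian policy samples from a normal distribution defined by a mean vector and a log standard deviation for each action dimension.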
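Both policy types can be sketched without a deep learning framework. In the pure-Python version below, the logits, means, and log standard deviations are plain lists standing in for what a neural network would output. All function names are illustrative.

```python
import math
import random


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_categorical(logits, rng):
    """Sample a discrete action and return it with its log-probability."""
    probs = softmax(logits)
    action = rng.choices(range(len(probs)), weights=probs)[0]
    return action, math.log(probs[action])


def diag_gaussian_log_prob(action, mean, log_std):
    """Log-density of a diagonal Gaussian, summed over action dimensions."""
    return sum(
        -0.5 * ((a - m) / math.exp(ls)) ** 2 - ls - 0.5 * math.log(2 * math.pi)
        for a, m, ls in zip(action, mean, log_std)
    )


def sample_diag_gaussian(mean, log_std, rng):
    """Sample a continuous action and return it with its log-probability."""
    action = [rng.gauss(m, math.exp(ls)) for m, ls in zip(mean, log_std)]
    return action, diag_gaussian_log_prob(action, mean, log_std)
```

The returned log-probabilities are the quantities that policy-gradient updates differentiate.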

Practical network architectures and examples

Use MLPs for simple state vectors and CNNs for images. Actor-critic designs have separate heads for value and action outputs. Gaussian policies often output the mean and log-std from separate heads; predicting the log of the standard deviation keeps it positive without constraints and helps keep training stable.

A practical tip: when actions have both discrete and continuous parts, bounding gradients helps training. Systems that follow these patterns learn well in 58-dimensional spaces and action sets with a few discrete moves and parameterized magnitudes.

Common algorithms and families of reinforcement learning methods

Reinforcement learning has different families for various problems. Value-based methods find the best action by looking at action values. Policy gradient methods improve policies by learning from returns. Actor-critic methods combine both to learn faster and more accurately.

Value-based approaches

Q-learning is a well-known method that updates action values. It’s good for simple actions but needs lots of data with complex inputs. Deep Q-Networks (DQN) use neural networks and experience replay to handle complex inputs. DQN achieved human-level play in many Atari games, showing the power of value-based RL with deep learning.
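To make the update concrete, here is a minimal tabular Q-learning sketch. The five-state corridor environment and the hyperparameters are assumptions for illustration. DQN replaces the table with a neural network, which this sketch does not show.

```python
import random

N_STATES, GOAL = 5, 4  # hypothetical corridor: states 0..4, goal at the right end
ACTIONS = (-1, +1)     # move left or right


def step(state, action):
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            if rng.random() < epsilon or Q[s][0] == Q[s][1]:
                a = rng.randrange(2)  # explore, or break ties at random
            else:
                a = 0 if Q[s][0] > Q[s][1] else 1
            s2, r, done = step(s, ACTIONS[a])
            # Bootstrapped target: reward plus discounted best next-state value.
            target = r if done else r + gamma * max(Q[s2])
            Q[s][a] += alpha * (target - Q[s][a])
            s = s2
    return Q
```

After training, the greedy action in every non-goal state should be "right", since that is the only way to reach the reward.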

Policy optimization methods

Policy gradient methods learn a policy directly by following the gradient of expected return with respect to the policy parameters. REINFORCE is a simple method but its gradient estimates can have high variance. Proximal Policy Optimization (PPO) is more stable and widely used in robotics and continuous control. PPO is often chosen for its reliability across different tasks.
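A minimal REINFORCE-style sketch on a two-armed bandit shows the shape of the update. The bandit, the Bernoulli rewards, and the running-average baseline are assumptions chosen to keep the example small. PPO adds a clipped objective that is not shown here.

```python
import math
import random


def softmax(prefs):
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    z = sum(exps)
    return [e / z for e in exps]


def reinforce_bandit(true_means=(0.2, 0.8), lr=0.1, steps=2000, seed=0):
    rng = random.Random(seed)
    theta = [0.0] * len(true_means)  # one logit per action
    baseline = 0.0                   # running average reward, reduces variance
    for t in range(1, steps + 1):
        probs = softmax(theta)
        a = rng.choices(range(len(theta)), weights=probs)[0]
        ret = 1.0 if rng.random() < true_means[a] else 0.0  # Bernoulli reward
        for k in range(len(theta)):
            # Gradient of log pi(a) with respect to theta[k] for a softmax policy.
            grad_log_pi = (1.0 if k == a else 0.0) - probs[k]
            theta[k] += lr * (ret - baseline) * grad_log_pi
        baseline += (ret - baseline) / t
    return softmax(theta)
```

The update raises the log-probability of actions whose return beats the baseline and lowers it otherwise.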

Actor-critic and hybrid strategies

Actor-critic methods have an actor that proposes actions and a critic that evaluates them. The critic’s value estimate reduces variance in policy updates. This mix supports both discrete and continuous actions, making it a key part of many algorithms.
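One simple way a critic feeds the actor is through a one-step advantage estimate. In the sketch below, the function name and arguments are illustrative, and the value estimates are assumed to come from whatever critic is in use.

```python
def td_advantage(reward, value_s, value_next, gamma, done):
    """One-step advantage estimate: r + gamma * V(s') - V(s).

    value_s and value_next are the critic's estimates. No bootstrapping
    is applied when the episode has ended.
    """
    bootstrap = 0.0 if done else gamma * value_next
    return reward + bootstrap - value_s
```

The actor then scales its log-probability gradient by this advantage instead of the raw return, which is where the variance reduction comes from.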


| Algorithm family | Representative methods | Strengths | Weaknesses |
|---|---|---|---|
| Value-based | Q-learning, DQN | Simple updates, effective for discrete actions, proven in games | Data hungry with neural nets, can be unstable |
| Policy gradient | REINFORCE, PPO | Direct policy optimization, handles continuous actions, stable with PPO | High variance for REINFORCE, PPO may converge slowly |
| Actor-critic | A3C, TD3, SAC (hybrid flavor) | Variance reduction, good for continuous control, scalable | Complex tuning, can be computationally demanding |

Practical considerations for training RL agents

Training robust agents needs more than just algorithms. The design of rewards must be precise to guide behavior without leading to bad incentives. Careful reward shaping can speed up progress, but a wrong signal can lead to unwanted shortcuts.

Data efficiency is key in real-world projects. Improving sample efficiency cuts down on costs and shortens the time needed for each iteration. Techniques like experience replay help use past data, making updates more stable in off-policy methods.

Stability issues often arise from bootstrapping, correlated samples, and function approximation. Using target networks, normalization layers, and replay buffers helps keep RL stable over long periods. Adjusting hyperparameters is crucial for convergence and avoiding divergence.
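Two of these stabilizers are small enough to sketch directly. The class and function names are illustrative, and parameters are plain lists of floats standing in for network weights.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of past transitions for off-policy updates."""

    def __init__(self, capacity, seed=0):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are evicted first
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling breaks the correlation between consecutive steps.
        # Raises ValueError if batch_size exceeds the number of stored items.
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def soft_update(target_params, online_params, tau=0.005):
    """Move target parameters a small step toward the online parameters."""
    return [(1 - tau) * t + tau * o for t, o in zip(target_params, online_params)]
```

Some algorithms copy the online parameters into the target network every fixed number of steps instead of averaging. That corresponds to `tau=1.0` applied periodically.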

Reproducibility is a big challenge for teams and papers. Fixing random seeds, logging experiments, and publishing baselines help make results comparable. The community also offers tools and surveys on designing robust and safe training regimes.

- Reward shaping: align incentives to task goals while testing for perverse incentives.

- Experience replay: prioritize and balance samples to boost sample efficiency.

- Hyperparameters: sweep systematically and report configurations for reproducibility.

Practical pipelines combine model-based pretraining, transfer learning, and simulated environments to save samples. Keeping an eye on learning curves and safety constraints ensures projects stay on track. This approach maintains RL stability and reproducibility.

Applications of reinforcement learning across industries

Reinforcement learning has moved from labs to real-world systems. It shows its power when agents learn by trial and error to reach long-term goals. This section maps practical uses across games, robotics, transport, healthcare, and finance.

Game playing: from classic arcade challenges to complex strategy

Early breakthroughs used Atari benchmarks where agents learned from raw pixels to beat human scores. DeepMind’s AlphaGo proved RL can master long-range planning and layered tactics against world champions.

Game environments remain vital testbeds for exploring exploration strategies and reward design. They help refine algorithms before real-world deployment.

Robotics: locomotion, manipulation, and bridging simulation to reality

Robotics RL drives advances in walking, grasping, and coordinated motion. Researchers train policies in physics simulators, then apply domain randomization and sim-to-real techniques to transfer skills to hardware.

Robotics RL reduces trial-and-error on costly robots by letting models learn large parts of behavior in simulation first.

Autonomous systems and transportation

Autonomous vehicles use RL for decision-making in dynamic traffic and obstacle avoidance. Methods optimize routing, lane changes, and energy efficiency under uncertain conditions.

RL helps improve safety and adaptivity in complex urban scenarios when paired with strong perception stacks and rigorous testing.

Healthcare, finance, and operational optimization

RL in healthcare supports treatment scheduling, personalized interventions, and hospital resource allocation. Trials focus on safe, interpretable policies that aid clinicians.

In finance, RL agents are used to design trading strategies, position sizing, and portfolio rebalancing while managing risk. Industrial operators use RL to optimize supply chains, energy use, and maintenance schedules.

Across sectors, companies such as DeepMind and OpenAI drive research while hospitals and financial firms pilot targeted deployments. Cross-industry lessons on reward shaping, sample efficiency, and safe rollout remain central to broader adoption.

Challenges and limitations of reinforcement learning

Reinforcement learning has big hurdles that affect its use. It needs lots of computer power and long training times. This makes it hard to test and use in real-world projects like robotics or self-driving cars.

High computational costs and data inefficiency

Deep RL experiments often need millions of frames to reach strong performance. Humans learn comparable tasks from far fewer examples. The gap shows how sample-inefficient and costly it is to train modern agents.

Sparse and delayed rewards

Many tasks give rewards only sometimes. This makes it hard to figure out what’s working. Rewards that come late or are rare slow down learning and lead to random exploration.

Generalization to new environments and safety concerns

Policies that work well in simulations often fail in real-world situations. They can be too brittle. Ensuring RL safety means testing thoroughly and using safe exploration methods.

To tackle these challenges, we need ways to make RL more efficient and safe. Techniques like transfer learning and careful policy updates help. Open benchmarks also help make results more reliable.

Advances improving RL: transfer, hybrid models, and simulation

The field of reinforcement learning is growing fast, thanks to methods that make training quicker and more relevant to real-world tasks. Researchers use transfer learning to reuse learned behaviors. They also design agents that handle several tasks at once with multi-task RL.

One key method combines supervised pretraining with policy fine-tuning. This gives agents a strong foundation from labeled or self-supervised datasets. Hybrid AI models mix different techniques to improve training and speed up learning on complex inputs.

Simulation training is a big help in scaling experiments. It lets agents test risky scenarios without harming real hardware. Domain randomization adds variety to make policies more robust.
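Domain randomization can be as simple as resampling simulator parameters at the start of every episode. The parameter names and ranges below are hypothetical. Real values depend on the robot and the simulator in use.

```python
import random

# Hypothetical parameter ranges, chosen only for illustration.
PARAM_RANGES = {
    "friction": (0.5, 1.5),
    "mass_scale": (0.8, 1.2),
    "sensor_noise_std": (0.0, 0.05),
}


def sample_sim_params(rng):
    """Draw one set of physics parameters uniformly from the configured ranges."""
    return {name: rng.uniform(lo, hi) for name, (lo, hi) in PARAM_RANGES.items()}


rng = random.Random(0)
episode_params = [sample_sim_params(rng) for _ in range(3)]  # one draw per episode
```

A policy trained across many such draws cannot rely on any single friction or mass value, which is the intuition behind its improved robustness.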

Sim-to-real transfer bridges the gap between virtual and physical worlds. Fine-tuning in hardware and simulators helps agents adapt quickly. This approach is key for using a single model on different robots or tasks.

Tools and shared benchmarks help everyone make progress together. Libraries like OpenAI Gym and ROS make it easier to compare experiments. Papers share details on how to improve transfer learning and multi-task RL.

Using hybrid AI models and sim-to-real practices makes RL more useful for industries. Teams that mix different methods report faster development. This approach is becoming the norm for turning research into practical systems.

Best practices for implementing reinforcement learning systems

Begin with clear goals that align with long-term objectives. Good reward design starts with defining success in terms of cumulative return and specific tasks. Use Sutton & Barto’s work for foundational methods. Track success rates, episode return, and safety as key metrics.

Validate progress with established RL benchmarks before deploying custom systems. OpenAI Gym and MuJoCo offer standardized tasks for fair comparisons. Compare against known baselines and conduct ablation studies to highlight important changes.

Design experiments for easy reproducibility. Log seeds, hyperparameters, and training traces. Store checkpoints and random seeds for auditing. Use consistent evaluation methods when comparing benchmarks and baselines.
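A minimal sketch of this habit: run the same configuration under several seeds and store the configuration, seeds, and results together. Here `train_and_evaluate` is a placeholder that returns a fake score, not a real training run, and the config keys are made up.

```python
import json
import random
import statistics


def train_and_evaluate(config, seed):
    """Placeholder for a real training run; returns a fake final return."""
    rng = random.Random(seed)  # all randomness flows from the logged seed
    return config["base_return"] + rng.gauss(0.0, 1.0)


def run_experiment(config, seeds):
    returns = [train_and_evaluate(config, s) for s in seeds]
    record = {
        "config": config,
        "seeds": list(seeds),
        "returns": returns,
        "mean": statistics.mean(returns),
        "stdev": statistics.stdev(returns),  # needs at least two seeds
    }
    return json.dumps(record, sort_keys=True)


log_line = run_experiment({"base_return": 10.0, "lr": 3e-4}, seeds=[0, 1, 2])
```

Writing `log_line` to a file next to the checkpoints gives an auditable record that can be rerun and compared exactly.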

Choose robust tooling from the open-source community. Libraries like Stable Baselines, PyTorch, and TensorFlow speed up development. Use OpenAI Gym environments to test and validate RL best practices.

Implement thorough testing and monitoring in production. Track policy performance with continuous metrics and safety checks. Set up alerts for drift or regressions in those metrics.
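One simple form of such an alert is a rolling-mean check on episode return. The class name, window size, and threshold are assumptions here. Production systems would usually track several metrics, not only return.

```python
from collections import deque


class ReturnMonitor:
    """Flags a regression when the rolling mean return drops below a threshold."""

    def __init__(self, baseline_mean, tolerance, window=100):
        self.baseline_mean = baseline_mean
        self.tolerance = tolerance
        self.recent = deque(maxlen=window)

    def record(self, episode_return):
        """Store a return; report True when the rolling mean has regressed."""
        self.recent.append(episode_return)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough data yet
        rolling_mean = sum(self.recent) / len(self.recent)
        return rolling_mean < self.baseline_mean - self.tolerance
```

A `True` result would typically trigger an alert or a rollback review, not an automatic policy change.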

Run systematic hyperparameter sweeps and document results. Use grid, random, or Bayesian searches to understand sensitivity. Publish learning curves and compare to baselines to justify and guide future tuning.
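Grid and random search are easy to sketch with the standard library. The search space below is illustrative, and Bayesian search would need an external optimizer that is not shown.

```python
import itertools
import random


def grid_search(space):
    """Yield every combination of the listed hyperparameter values."""
    keys = sorted(space)
    for values in itertools.product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


def random_search(space, n_trials, seed=0):
    """Yield n_trials configurations sampled independently per hyperparameter."""
    rng = random.Random(seed)
    for _ in range(n_trials):
        yield {k: rng.choice(v) for k, v in sorted(space.items())}


space = {"lr": [1e-4, 3e-4], "gamma": [0.95, 0.99, 0.999]}
grid_configs = list(grid_search(space))          # 2 * 3 = 6 configurations
random_configs = list(random_search(space, 4))   # 4 sampled configurations
```

Each configuration would then be trained under several seeds and logged with its learning curve.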

Include human oversight in deployment plans. Combine automated tests with staged rollouts and human checks for risky actions. Maintain rollback plans and limits on exploration in live settings to reduce unintended behavior.
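A minimal sketch of a limit on live actions: clamp the proposed action to a safe range and substitute a fallback when a risk check fires. The function, the scalar action, and the `is_risky` callback are hypothetical. Real systems would define these per application.

```python
def safe_action(proposed, low, high, is_risky, fallback):
    """Return a bounded action, or the fallback when the risk check fires.

    is_risky is a caller-supplied predicate (an assumption of this sketch),
    for example a rule that routes the decision to human review.
    """
    if is_risky(proposed):
        return fallback
    return min(max(proposed, low), high)
```

For example, `safe_action(5.0, -1.0, 1.0, lambda a: False, 0.0)` clamps the action to `1.0`, while a predicate that returns `True` yields the fallback `0.0`.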

Create a culture of continuous improvement. Regularly review failed runs, refine reward design, and update metrics based on feedback. Treat benchmarks, baselines, and monitoring as evolving artifacts.

Ethics, safety, and regulation considerations for RL

Reinforcement learning systems raise important questions about responsibility and trust. Teams at OpenAI, Google DeepMind, and academic labs say careful design and oversight can reduce harm. This includes avoiding misspecified rewards and hidden biases.

Bias can occur when training data or rewards favor certain groups or behaviors. Misspecified objectives can lead to unintended consequences that appear after deployment. Robustness testing helps find these issues before they affect the real world.

Bias, unintended behavior, and robustness

Design rewards to avoid perverse incentives. Use audits and stress tests to find failure modes early. Robustness checks should include adversarial scenarios, distribution shifts, and long-horizon evaluations.

Independent evaluation reduces the chance of hidden bias. Continuous monitoring in production catches drift and flags unexpected behavior before it escalates.

Human oversight, interpretability, and Explainable AI

Human oversight keeps an expert in the loop during critical decisions. Systems that require human review for high-risk outputs lower operational risk. Logging and rollback mechanisms make interventions feasible.

XAI tools such as saliency maps, policy visualizations, and counterfactual explanations help operators understand why an agent acted a certain way. Interpretability combined with human oversight builds trust and improves AI safety.

Regulatory environment and industry standards in the United States

AI regulatory guidance in the United States is evolving across sectors. Healthcare, automotive, and finance each have tailored compliance demands that affect RL deployments. Practitioners must align robustness testing and auditing with relevant standards set by agencies like the FDA or NHTSA when applicable.

Following industry best practices for documentation, model cards, and risk assessments supports compliance. Independent audits and third-party verification strengthen public confidence in RL systems.

| Risk Area | Mitigation Steps | Relevant Practices |
|---|---|---|
| Reward misspecification | Iterative reward design, simulated red-teaming, counterfactual checks | Reward audits, scenario testing, peer review |
| Bias and fairness | Diverse datasets, bias metrics, post-deployment monitoring | Fairness testing, demographic impact reports, third-party audits |
| Safety and unintended consequences | Robustness validation, fail-safe gates, human-in-the-loop controls | Stress testing, sandboxed trials, operational alarms |
| Interpretability | Use XAI methods, generate explanations, maintain transparent logs | Model cards, explainability reports, operator dashboards |
| Regulatory compliance | Map regulations, document decisions, conduct independent audits | Compliance checklists, sector-specific testing, legal review |

Reinforcement learning

People searching for reinforcement learning want clear answers. They look for simple examples and ways to practice. Here, we guide you on what to read, where to practice, and which projects to try.

Core keyword overview and search intent alignment

Those learning about RL seek both basic explanations and practical advice. Start with a simple definition of the agent–environment loop and reward-driven goals. Use analogies like teaching a dog to sit or balancing a robot to help beginners understand.

For a quick technical primer, check out the reinforcement learning summary. It explains MDPs, rewards, and basic algorithms in easy terms.

Key resources and canonical references

For a solid theory base, read Sutton & Barto’s “Reinforcement Learning: An Introduction”. It covers dynamic programming, Monte Carlo, and temporal-difference methods. Also, check out DeepMind papers like Mnih et al. 2015 on DQN and Silver et al. 2017 on AlphaGo Zero for landmark deep RL results.

Combine papers with curated resources that include code and commentary. Reviewing DeepMind publications and open-source repos shows how algorithms are applied in real systems.

How to start learning: courses, docs, and hands-on projects

Choose an RL course that mixes theory and practice. Look for courses with assignments and code reviews. Then, follow tutorials that use PyTorch or TensorFlow.

Start with simple environments in OpenAI Gym, such as gridworlds or classic control tasks. Then move to continuous-control tasks in MuJoCo or Brax. Use the Stable Baselines and OpenAI docs for reliable examples and code.

Keep a learning log and run small experiments. Read DeepMind papers to compare your results. This cycle of study, code, and review is key to mastering RL for real-world use.

Conclusion

Reinforcement learning lets machines learn by trying and failing. They aim to maximize cumulative reward in complex tasks. The method rests on math such as Markov Decision Processes and Bellman equations. In practice it relies on policy parameterizations and stochastic sampling, with applications in games, robotics, and more.

There are challenges, however. High compute needs, sparse rewards, and limited generalization are the main ones. Overcoming them takes good reward design, simulation training, and attention to safety in real-world use. Hybrid methods and transfer learning can make training faster and more reliable.

The future of RL looks bright. Advances in deep learning, better benchmarks, and clearer tools are on the horizon. For those finishing this tutorial, reading Sutton and Barto, trying open-source libraries, and testing in simulations are good next steps.

FAQ

What is reinforcement learning (RL) in simple terms?

Reinforcement learning is a way for machines to learn by doing. They try different actions and see what happens. They then learn to do better next time. It’s like learning from trial and error, not from examples.

It’s different from other learning methods because it doesn’t need examples. Instead, it learns by trying things out. Sutton & Barto (2018) and DeepMind papers by Mnih et al. (2015) and Silver et al. (2017) explain it well.

What are the core components of an RL problem?

An RL problem has several key parts: the agent, the environment, states, actions, rewards, and the transition dynamics that describe how states change. It also specifies the distribution over starting states and the policy the agent follows.

Agents make decisions, environments respond, and rewards tell them how they did. Policies guide the agent’s actions. Value functions help figure out the best actions.

How does the observation-action-reward-update cycle work?

The cycle starts with the agent seeing its surroundings. Then, it picks an action and gets a reward and new surroundings. It uses this to improve its actions for the next time.

This cycle keeps happening. It helps the agent learn what works best over time.

What is the difference between discrete and continuous action spaces?

Discrete action spaces have a fixed set of choices. This is common in games and simple tasks. Categorical (softmax) policies are commonly used here.

Continuous action spaces have actions that can be any value. This is seen in robotics and tasks needing precise control. Diagonal Gaussian policies are often used here.

How do rewards, return, and discounting work?

Rewards tell the agent how it did right away. Return is the total reward over time. Discounting helps prioritize rewards sooner rather than later.

Agents aim to maximize their return. This helps them learn to make better choices over time.

What is the exploration vs. exploitation trade-off?

Exploration means trying new things to learn. Exploitation means sticking with what works. Finding the right balance is key.

Too much exploration wastes time. Too little might keep the agent from finding better ways. ε-greedy and entropy bonuses help find this balance.

What are value-based, policy-gradient, and actor-critic methods?

Value-based methods, like Q-learning, focus on value functions. Policy-gradient methods, like REINFORCE, directly update policies. Actor-critic methods combine both.

Actor-critic methods use an actor to update policies and a critic to estimate value functions. This helps reduce variance and stabilize learning.

Why merge deep learning with RL?

Deep learning helps RL handle complex inputs like images. It’s key for tasks like robotics and autonomous driving. Deep RL has led to breakthroughs in these areas.

Deep neural networks help map observations to actions. This is crucial for complex tasks.

How are deterministic and stochastic policies parameterized?

Deterministic policies directly map observations to actions. Stochastic policies represent action distributions. Diagonal Gaussian distributions are common for continuous actions.

Sampling and log-likelihood computations are used for updates. This supports gradient-based learning.

What practical architectures are common in RL?

For simple tasks, multilayer perceptrons (MLPs) are used. For pixel inputs, convolutional neural networks (CNNs) are better. Actor-critic setups often share layers.

Examples and code patterns are in PyTorch and TensorFlow. These help implement RL architectures.

How important is reward design and what are perverse incentives?

Reward design is crucial. Poor rewards can lead to bad behaviors. Reward shaping can help but must be done carefully.

Aligning rewards with long-term goals is important. Testing through ablations helps avoid bad incentives.

What techniques improve sample efficiency and stability?

Experience replay buffers help with off-policy learning. Target networks stabilize value backups. Normalization and tuned hyperparameters also help.

Using simulated environments is another way to improve. Transfer learning and model-based components can also reduce sample needs.

How do Bellman equations fit into RL?

Bellman equations express value functions recursively. They are key for dynamic programming and value-iteration methods. Many algorithms rely on them.

What is an MDP and the Markov property?

An MDP models sequential decision-making. It has states, actions, rewards, and transition probabilities. The Markov property means the next state depends only on the current state and action.

This property makes analysis and algorithm design easier.

What benchmarks, libraries, and resources should I use to learn RL?

Atari, MuJoCo, and OpenAI Gym are good benchmarks. Libraries such as Stable Baselines and RLlib, along with examples from OpenAI, DeepMind, and the PyTorch and TensorFlow communities, are useful. Sutton & Barto’s Reinforcement Learning: An Introduction is a key text.

Start with simple tasks and use established libraries. Tutorials and course materials are also helpful.

What are common real-world applications of RL?

RL is used in game-playing agents, robotics, autonomous driving, healthcare, finance, and operational optimization. It helps solve complex problems.

What are the main challenges limiting RL adoption?

High computational cost, data inefficiency, and sparse rewards are major challenges. Generalizing to unseen environments and safety risks are also concerns. Improving sample efficiency and safety is key.

How does simulation and sim-to-real transfer help RL for robotics?

Simulation allows for safe, low-cost training. Domain randomization and environment variability help policies transfer to real-world scenarios. Fine-tuning on real data and using robust controllers are important steps.

What safety, ethical, and regulatory concerns apply to RL?

RL systems can exhibit unintended behaviors. Human oversight, interpretability tools, and Explainable AI (XAI) are crucial. Following industry standards and guidelines is important.

Testing, auditing, and monitoring are essential for safety.

How do I evaluate and monitor an RL agent in production?

Use cumulative return, task-specific metrics, and benchmarks to evaluate. Log trajectories, use fixed seeds, and run multiple seeds. This ensures reproducibility and consistency.

In production, employ sandboxing, safety checks, and continual monitoring. Human-in-the-loop controls are also important.

What are best practices to get started with RL projects?

Start with clear objectives and aligned rewards. Use standard benchmarks and established libraries. Follow canonical texts and replicate known experiments.

Train in simulation first, use reproducible pipelines, and log extensively. Iterate on reward design and architectures while testing for safety and robustness.

Which algorithms are recommended for beginners versus advanced use?

Beginners should start with value-based methods like DQN. Simple policy-gradient or actor-critic methods are good for continuous control. PPO is a popular choice for intermediate use.

Advanced work may require off-policy actor-critic algorithms or custom architectures. The choice depends on the task and data budget.

What references and papers should I read first?

Start with Sutton & Barto’s Reinforcement Learning: An Introduction. Then, read Mnih et al. (2015) on DQN and Silver et al. (2017) on AlphaGo Zero. OpenAI and DeepMind technical reports and tutorials in PyTorch and TensorFlow are also helpful.

How do policy gradients compute updates and why do we need log-likelihoods?

Policy-gradient methods estimate the gradient of expected return. They use sampled actions and returns to update policies. For stochastic policies, computing the log-likelihood of sampled actions is necessary.

This is needed to form gradient estimates and apply variance reduction. Baselines or critics help with this.

What role do experience replay and target networks play in RL?

Experience replay stores past transitions for off-policy updates. Target networks stabilize value backups in value-based methods. They reduce harmful feedback loops during training.

How can I improve reproducibility in RL experiments?

Fix random seeds and log hyperparameters and code versions. Use deterministic environment wrappers when possible. Run multiple seeds to report variance.

Share code and baselines. Reproducibility also benefits from consistent evaluation protocols and clear reporting of implementation details.

Are there hybrid approaches that combine supervised learning with RL?

Yes. Pretraining representations with supervised or unsupervised learning before RL fine-tuning reduces sample complexity. Hybrid models integrate learned dynamics or use imitation learning to bootstrap policies.

These approaches accelerate convergence and improve performance in complex tasks.

What are practical recommendations for reward shaping without creating shortcuts?

Design sparse primary objectives aligned with the true task. Then, add shaping terms carefully tested to avoid rewarding bad behaviors. Use curriculum learning and incremental task complexity.

Evaluate on held-out scenarios to detect shortcut exploitation. Prefer shaping that leaves the optimal policy unchanged, such as potential-based shaping, when possible.
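One well-known way to add shaping without changing which policy is optimal is potential-based shaping, where the bonus is the discounted change in a potential function over states. The sketch below assumes the caller supplies the potentials, and it treats the potential of a terminal state as zero, which is a common convention and not something stated in the text.

```python
def shaped_reward(reward, phi_s, phi_next, gamma, done=False):
    """Add the potential-based term F = gamma * phi(s') - phi(s) to the reward.

    phi_s and phi_next are values of a designer-chosen potential function,
    for example negative distance to the goal (an illustrative choice).
    """
    next_potential = 0.0 if done else phi_next
    return reward + gamma * next_potential - phi_s
```

Moving to a state with higher potential earns a bonus, and moving back gives it up again, so the agent cannot farm the shaping term by looping.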

How do I choose between on-policy and off-policy algorithms?

On-policy methods (e.g., PPO) optimize using data from the current policy. They tend to be more stable but less sample efficient. Off-policy methods (e.g., DQN, SAC) reuse past experience and are more sample efficient.

Off-policy methods require mechanisms such as replay buffers, target networks, or importance-sampling corrections, along with careful stabilization. The choice depends on data budget, task dynamics, and safety constraints.

What practical tools help with safe deployment of RL agents?

Sandboxing environments, action-space constraints, fallback controllers, and human-in-the-loop overrides are essential. Rigorous validation on edge cases and continuous monitoring are also important.

Use interpretability tools, uncertainty estimates, and conservative deployment strategies. This limits risk in safety-critical domains like healthcare and autonomous driving.

Where can I find code examples and implementations?

Open-source repositories and libraries provide many examples. OpenAI Gym for environments, Stable Baselines and RLlib for algorithms, and community PyTorch/TensorFlow implementations are available. Study these repositories alongside academic papers to connect theory with practice.